-

Notifications

You must be signed in to change notification settings - Fork 559

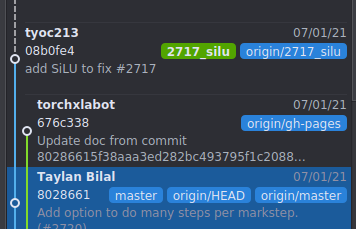

add SiLU to fix #2717 #2721

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

add SiLU to fix #2717 #2721

Conversation

|

if something needs to be fixed, will also add the |

JackCaoG

left a comment

JackCaoG

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks for the contribution, couple nits

|

@JackCaoG just hit Even if I stash my changes the same error shows For the moment will clean and build again, but there is other way? |

This usually means there is a mismatch between what we overwrite and what pytorch provided. The most common reason is pytorch update the signature but we didn't follow. Looking at the circleCI run it seems like pt and pt/xla head is fine, could you try to rebase and rebuild? |

|

mmm if rebase means to be pointing to 8028661 that is OK I tried to clone again the repo&branch and sync submodules but still the same error. The only missing thing would be to update pytorch which I was some commits behind and see if that fixes it. Still dont know why the initial commit on this branch didn't show the error until now? (I mean it even passed the little python test). |

|

@JackCaoG I think I got why it worked before, I was some commits behind in pytorch and xla and I started to work on that setup when I got something working, I stashed it and updated xla to make the PR branch and pushed it. Now I have updated pytorch but Im getting this error in master for xla after update: It is ok to continue reporting this here? or should I open an issue? |

|

Now it almost there, but I guess it is by the warning? |

|

yea, that seems to be where the error coming from in my log I saw |

|

@JackCaoG I guess it is something in the path? or know what I need to change to make it work? (Im searching but havent found an "easy fix") |

|

After some search it seems like that warning does not cause the compilation failure. the real error seems to be Does it fail with master? Did you see this error before? |

|

@JackCaoG no, I haven't seen this error before and yes for me it also happens in master. The end on master shows the same error If you see it says |

|

the problem seems that cmake (or make?) seems to not find

I had cmake 3.16, just compiled 3.19.2. (I also have GNU Make 4.2.1) But And I wonder what is happening. But the .so is there... |

|

I think I "found" the error, but dont know why it didnt happen before, the path What exist is up to this place And it only included those 2 so I made the path So I try again on master.... But dont know if I need to compile two times one to generate the current .o and the second want after updating manually the lib in the desired path |

27d21dc to

01eb9a9

Compare

|

cout/cerr is fine.

it sounds like pt/xla does not return the correct tensor to the upper pytorch layer. Having a gdb and set break point on pt/xla functions you defined and inspect the value of the tensors should help

Can you give me a more concrete example? |

|

I was trying to do some like NodePtr SiLU(const Value& input, const Value& output) {

auto lower_fn = [](const Node& node, LoweringContext* loctx) -> XlaOpVector {

xla::XlaOp xla_input = loctx->GetOutputOp(node.operand(0));

xla::XlaOp output = loctx->GetOutputOp(node.operand(1));

return node.ReturnOp(BuildSiLU(xla_input, output), loctx);

};

}And then // NOTE: there are no `xla::XlaOp& output`like params in the rest of the file

xla::XlaOp BuildSiLU(xla::XlaOp input, xla::XlaOp& output) {

const xla::Shape& shape = XlaHelpers::ShapeOfXlaOp(input);

xla::XlaOp half = XlaHelpers::ScalarValue<float>(0.5, shape.element_type(),

input.builder());

output = input * (half + half * xla::Tanh(half * input)); // IIRC this fails

return output; // And this will be weird because value "would be" already set if the line above worked

} |

I don't think you need to manually pass the output and then do a In BuildSilu, you can even or you don't need to have this helper function and do

|

|

I see, you can flag the change as review if you preferr one or the other instead of how I have it in code. The "sample above" was one of the things I was trying to do before "latest working commit". Before I didn't know where to stop thinking of this

So I thought that if I used the other operations at that level it will emit |

|

I think I will prefer the latest way without helper function if it emits correctly. Is there a way to stop the build process on CI? Or should I just commit again? I guess I will just ammend latest commit so that |

JackCaoG

left a comment

JackCaoG

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@tyoc213 Thanks for the contribution! Very minor nits, otherwise LGTM.

ir graph only being effected by |

JackCaoG

left a comment

JackCaoG

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Great work! I will merge it once all tests passed.

|

Yey! Thanks, was thinking of the other two ops that you said before (except if you have any other "easy one to lvl up"), do you have any plans on taking them in the near future? and to that point And was thinking also maybe open an issue regarding the path Extra Q: those build can be installed/downloaded or only the nightly build is available? Extra Q2: the only way to dump the graph is with the report that makes a zip file? |

What do you mean by

feel free to open the issue but it would be a bit hard to debug if I can't repo it locally.

what do you mean? You can also build the pt/xla from scratch, we also published built wheel everyday.

there are two api you can use at anytime |

Inside pytorch there is a

yeah, I was thinking of the time because I too think it will more time (maybe 2-5 weeks hopefully), and most probably will make some mistakes on the way, but if that will take more than what you have in mind for this op(s), will wait/see if there is another I can do or just continue checking the rest of the code.

Oh yeah, I mean, this CI builds can be used from colab? or only the ones of the nightly? |

I am actually not sure about that file, @ailzhang any ideas?

That time sounds reasonable to me.

I don't think you can use CI build for cloab |

|

I might start taking a look at next op which I guess will be 2d :) |

- `SgnOp` and `SignOp`

- Full codegen migration: #3577

- Mistakenly re-introduced: #3572

- `LogSigmoid`

- Introduced: #3539

- Full codegen migration: #3743

- `SiLU`

- Introduced: #2721

- Full codegen migration: #3780

- `SiLUBackward`

- Introduced: #3195

- Full codegen migration: #3780

- `SeLU`

- Introduced: #3547

- Full codegen migration: #3780

- `Sigmoid`

- Introduced: 6a73deb (no PR record)

- Full codegen migration: #6342

I had this version and also one implemented with

mulandsigmoiddirectly inaten_xla_typewithout going "down" totensor_methodsas this one is that preffered?I dont think I need to add a test case, but if I need to where I add it or what I would need to test?

My local test was something like

it prints

Waiting for review.

For the issue #2717