-

Notifications

You must be signed in to change notification settings - Fork 547

Optim-WIP: Preliminary transform unit tests & more #521

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

* No idea if this will work. * Not sure how I'm supposed to be writing these tests, how extensive they need to be, or if what I have so far is okay. * Still need to add some tests for GaussianSmoothing with different dimensions.

So, I added a try-except block to deal with the missing |

|

@NarineK Is adding This PR doesn't required PIL / Pillow or tqdm, so this is more about future test PRs. |

|

There's a slight issue with indexing tensors with named dimensions. This may present an issue for future PRs until it's fixed. |

|

@NarineK There shouldn't be anymore major changes to this PR right now, so you can review and merge it! |

Thank you very much for working on this, @ProGamerGov! I'll make a pass this week. |

|

@NarineK I added type hinting to all the optim I made a pull request to add the ending type hint stuff to all the |

da78e9a to

f43a11d

Compare

NarineK

left a comment

NarineK

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thank you for addressing the comments @ProGamerGov!

I made couple more nit comments.

| A workaround when there is no gradient flow from an initial random input. | ||

| ReLU layers will block the gradient flow during backpropagation when their | ||

| input is less than 0. This means that it can be impossible to visualize a | ||

| target without allowing negative values to pass through ReLU layers during |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thank you for adding description, @ProGamerGov! Is this replacement necessary if the target layer is a ReLU or is this necessary in general for all ReLU layers in the model?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

It is necessary for all ReLU layers in the model, regardless of what the target layer is.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thank you for the explanation, @ProGamerGov! Is this related to dead ReLU problem that Ludwig mentioned in his original PR ?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yeah, it's a solution to the dead ReLU problem!

| def backward(self, grad_output: torch.Tensor) -> torch.Tensor: | ||

| (input_tensor,) = self.saved_tensors | ||

| grad_input = grad_output.clone() | ||

| grad_input[input_tensor < 0] = grad_input[input_tensor < 0] * 1e-1 |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

nit: why is it necessary to multiple by 0.1 here ?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I'm not 100% sure why it's done so I've reached out to @greentfrapp for exactly why gradient scaling is required.

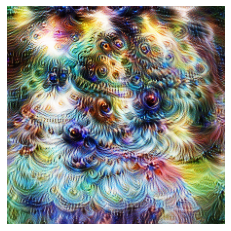

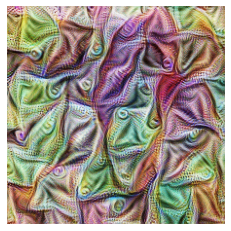

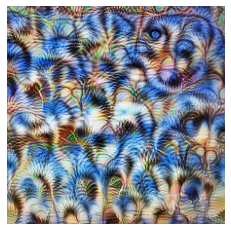

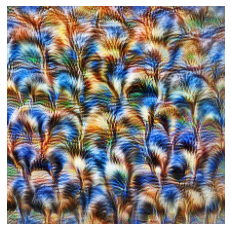

I also ran some tests and found that removing the scaling seemed to allow features that shouldn't be in the visualization (tests are from the InceptionV1 tutorial):

So, it looks like gradient scaling is required if we remove the normal backward ReLU code, but I'm not sure why scaling by 0.1 was chosen vs other numbers.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

So, @greentfrapp just chose 0.1 as a way of handling the gradient flow in a way that wouldn't mess things up. In Lucid, Lubwig let everything through with the same weight / scale for the first 16 optimization steps.

I think @greentfrapp is working on a more advanced version of RedirectedReLU that more closely resembles the one in Lucid, but for right now the current version is sufficient.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thank you for the explanation @ProGamerGov! Perhaps, we can write a sentence documentation about it so that we remember.

In the example above, does each image correspond to a step in the process of the optimization without using 0.1 multiplier ?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@NarineK The images were just the same ones as in the InceptionV1 tutorial notebook as I reran all the cells in the notebook without multiplying by 0.1 in RedirectedReLU. They aren't different steps. They are different targets and parameters from the tutorial notebook.

@greentfrapp has put together a more a better and advanced version of RedirectedReLU in his pull request, so I'll leave the RedirectedReLU version in this pull request as it currently is for merging. #552

|

|

||

| from captum.optim._param.image import transform | ||

| from tests.helpers.basic import BaseTest | ||

|

|

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I was thinking that if those transforms already exist in numpy or scipy them we could compare our implementation with theirs but we can do that later.

| rr_loss = rr_layer(x * 1).mean() | ||

| rr_loss.backward() | ||

|

|

||

| assertTensorAlmostEqual(self, t_grad_input[0], t_grad_output[0], 0) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Why do we need t_grad_input and t_grad_output as arrays if we are using only the first element ?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Lists can be appended from inside the hook function whereas other variables cannot, I think.

|

@NarineK I added NumPy comparison tests for 4 of the transforms! The other tests are not easily recreated in NumPy or there is no need for NumPy versions. |

f11fdb4 to

aabbd62

Compare

f8da94d to

9a79c78

Compare

bb64aef to

93275f9

Compare

|

@NarineK @greentfrapp and I had to make some changes to the FFTImage classes to handle the PyTorch fft depreciations, but it should be ready for merging once you've finished reviewing the code! |

|

Thank you, @ProGamerGov ! I'm merging now in order not to not make other PRs to wait. I will take another look later into more details. |

I've added the first batch of unit tests. I'm not sure if they need to be more in depth / test more stuff? Other unit tests are currently on hold due to potential changes to how the objective, optimization system, and ImageTensor will work. Once @greentfrapp finishes those changes, then we'll write tests for them.

Added unit tests for all of the optim transform classes and functions.

Added unit tests for FFTImage.

Added unit tests for PixelImage.

Added unit tests for loading and running pretrained InceptionV1 model.

Fixed a number of the optim transforms that hadn't been tested thoroughly yet.

Added warning about

tqdmpackage being required. It's pretty much the exact same as we added for PIL / Pillow.Moved some of the general model functions & classes to their own file. These functions and classes can be used on / with other models, so it makes sense to separate them from the Inception 5h model.

Added unit tests for all of the

_utils/modelsfunctions & classes.Added function to get all hookable model layers.

Added RedirectedReLU example to Torchvision notebook tutorial.

Added a ton of missing type hints.

Added functions for calculating the Karhunen-Loève (KLT) matrix of custom image datasets. The current ToRGB transform uses an ImageNet KLT matrix, but users may not always be using models trained on ImageNet. Added new tests the new functions.

Readded Ludwig's Conv2dSame with tests and type hints.