Single Active Consumer for streams #3754

|

A/C:

Start the broker

Remove the Docker image if it's already there locally, to make sure to pull the latest image later:

docker rmi pivotalrabbitmq/rabbitmq-stream

Start the broker:

docker run -it --rm --name rabbitmq -p 5552:5552 -p 5672:5672 -p 15672:15672 -e RABBITMQ_SERVER_ADDITIONAL_ERL_ARGS='-rabbitmq_stream advertised_host localhost' pivotalrabbitmq/rabbitmq-stream

EDIT after merging: the Docker image to use was

Get the Code

NB: requires JDK11+

Get the stream Java client, compile it, get the examples project:

cd /tmp
git clone git@github.com:rabbitmq/rabbitmq-stream-java-client.git
cd rabbitmq-stream-java-client
git checkout single-active-consumer
./mvnw clean install -DskipTests
./mvnw clean package -Dmaven.test.skip -P performance-tool
cd /tmp
git clone https://github.com/acogoluegnes/rabbitmq-stream-single-active-consumer.git

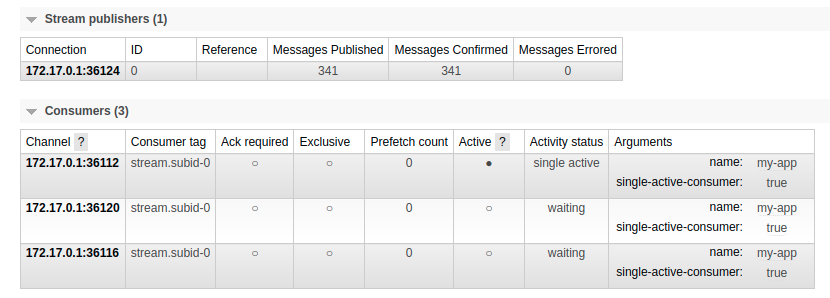

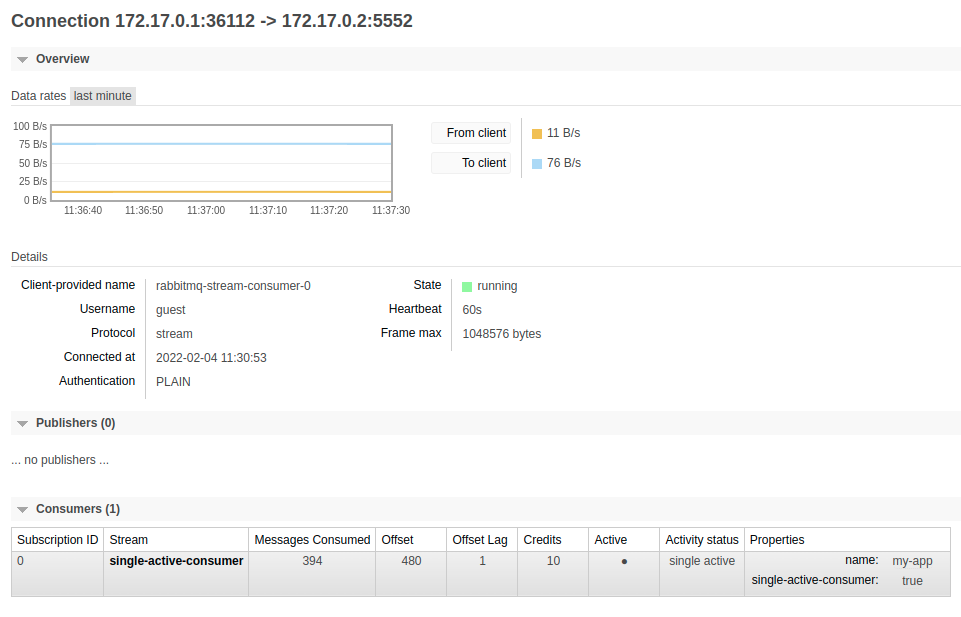

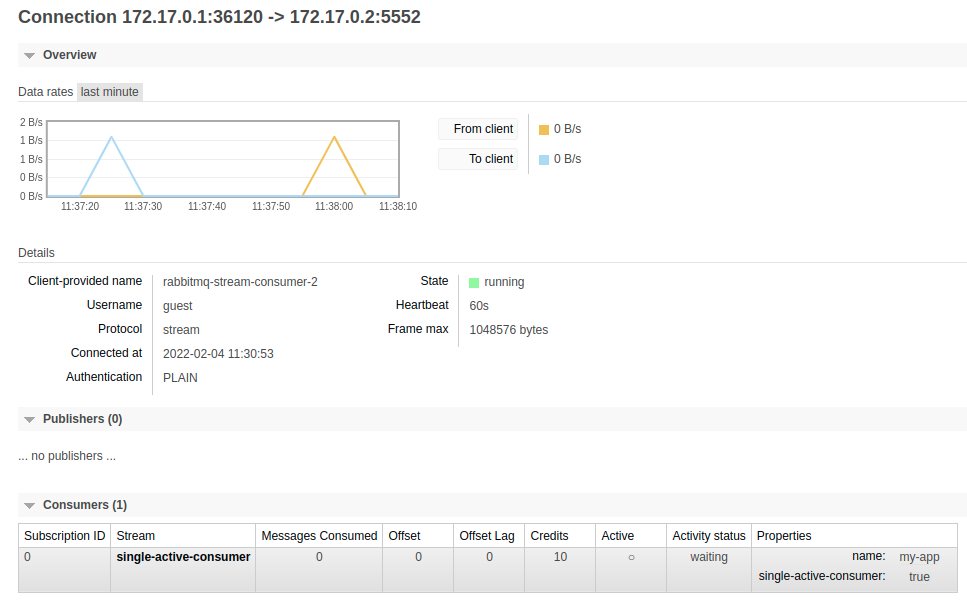

cd rabbitmq-stream-single-active-consumer

Single Active Consumer with 1 Stream

Start the consumer program:

The consumer program creates a

NB: more information on the single active consumer support in the stream Java client documentation

Start publishing with

(The performance tool outputs a warning about the creation of the stream, it's normal)

The consumer program should output something for each message:

List the stream consumers with

You should see the 3 consumers. Only one should be active. If there were other consumers in this virtual host, we should see them as well, but we have registered only 3 of them.

List the consumer groups with

You should see our group, with its stream and reference (

List the consumers of the group with

You should see the 3 consumers of the group, with their subscription ID in the connection, their owning connection, and their state. This command lists only the consumers of a given group, not other consumers.

Go to the page of the stream in the management UI:

You should see the consumers with the same details as in the output of the

Click on the link to the connection (channel column) of the active consumer:

This is a stream-specific page, so you should see extra information about the consumer (consumed messages, offset, etc). Check the state column says the consumer is active.

Go back to the

Check the state column says the consumer is not active.

Now go back to the page of the connection of the active consumer and close the connection (link at the bottom of the page). The output of the consumer program should say the next consumer took over:

Check the consumers of the group with

There should still be 3 consumers, because the stream client recovered the closed connection. The consumer next in line should be the active one now (in our example the consumer on port 36116 was the second in line, so it got promoted when the active consumer was closed).
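The takeover described above can be pictured with a small model. This is only a sketch, not the broker code: it assumes the group keeps its consumers in registration order, that the first one in the list is the active one, and that a recovered consumer re-registers at the end of the list. The class name, method names, and consumer names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

public class SingleStreamGroupSketch {

    // Consumers of one group on one stream, in registration order (assumption).
    private final List<String> consumers = new ArrayList<>();

    public void register(String consumer) {
        consumers.add(consumer);
    }

    public void unregister(String consumer) {
        consumers.remove(consumer);
    }

    // Assumption: the first registered consumer is the active one.
    public String active() {
        return consumers.isEmpty() ? null : consumers.get(0);
    }

    public int size() {
        return consumers.size();
    }

    public static void main(String[] args) {
        SingleStreamGroupSketch group = new SingleStreamGroupSketch();
        group.register("consumer-1");
        group.register("consumer-2");
        group.register("consumer-3");
        System.out.println("active: " + group.active());

        // The connection of the active consumer is closed, then the client recovers it.
        group.unregister("consumer-1");
        group.register("consumer-1");
        System.out.println("consumers: " + group.size() + ", active: " + group.active());
    }
}
```

In this model the group still has 3 consumers after the recovery and the second consumer in line is the active one, which is what the acceptance steps expect to observe.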
Stop

Delete the stream:

Single Active Consumer with a Super Stream

Create an

This should create an

Start a first instance of the consuming application:

The application starts a "composite consumer" for the super stream. This is a client-side feature. The client library registers a consumer to each partition (stream) of the super stream. Consider this program as an instance/VM/container/pod of a user application.

NB: more information on the single active consumer and super stream support in the stream Java client documentation

Start the publishing application:

The publishing application uses a "composite producer", which is also a client-side feature. The client library creates a producer for each partition and each message is routed based on a client-side routing strategy (in this case, hashing the ID of the message, which is an incrementing sequence). The application publishes a message every second, which means a message ends up in one of the partitions every 3 seconds (if the hashing is well-balanced, which it should be).

The consumer application should report messages:

The application reports for each message its ID and the partition it comes from. The messages should be well-balanced between partitions (but it's not round-robin!).

List all the stream consumers with

You should see the 3 consumers of the "composite consumer", one for each partition, all active (each is the only one on a given partition).

List the consumer groups with

A consumer group for each partition should show up. They should each report only one consumer (there's only 1 instance of the application so far).

Let's use

The group has only one consumer.
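The client-side routing of the composite producer (hash the message ID, pick a partition) can be sketched as follows. This is an illustration only: `String.hashCode` is a stand-in and not necessarily the hash function the stream Java client uses, and the class and method names are hypothetical. The partition names follow the `invoices-N` pattern used in this conversation.

```java
public class HashRoutingSketch {

    // Stand-in hash (assumption): String.hashCode is used for illustration only.
    public static int partitionFor(long messageId, int partitionCount) {
        int hash = Long.toString(messageId).hashCode();
        // floorMod keeps the result in [0, partitionCount) even for negative hashes.
        return Math.floorMod(hash, partitionCount);
    }

    public static void main(String[] args) {
        int partitionCount = 3;
        int[] perPartition = new int[partitionCount];
        // The message ID is an incrementing sequence, as in the publishing application.
        for (long id = 0; id < 30; id++) {
            perPartition[partitionFor(id, partitionCount)]++;
        }
        for (int p = 0; p < partitionCount; p++) {
            System.out.println("invoices-" + p + ": " + perPartition[p] + " messages");
        }
    }
}
```

The same ID always lands on the same partition, but consecutive IDs do not cycle through the partitions in order, which is why the walkthrough notes that the distribution is balanced without being round-robin.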
Start a second instance of the consuming application:

This second instance will also create a composite consumer and the broker will start dispatching messages from

So

The broker rebalanced the dispatching of messages: the

List all the stream consumers:

The list should confirm that one former active consumer (in our case the one from connection

Listing the consumer groups should confirm there are 2 consumers on each partition now:

Start now a third instance of the consuming application:

This third instance should get messages from

The first instance should get only messages from

List the consumers of the consumers on the first partition:

There should be 1 active consumer (the one from the first instance, which is active since the beginning) and 2 inactive consumers (the ones from instances 2 and 3, which we started after instance 1).

Stop

Listing the consumers of the group for

Stop

Stop

Trying to list the consumers of the group on

Stop the publisher.

Stop the broker Docker container. |

|

This pull request modifies the bazel build only. Should the makefiles be updated as well @acogoluegnes? |

This is fine. New test suites require a build file entry, due to fine grained dependencies, which is not the case in erlang.mk |

Thank you, looks very good overall. I did the acceptance. Worked for me.

I left some small inline comments.

More general questions are:

(1)

I'm missing declaring a SAC stream via AMQP / Management API. Will that be added in a future PR or should it be part of this PR or is that not planned at all?

Also when listing the queues in the Management UI, it would be nice to see the SAC feature icon (as displayed for other queue types).

(2)

In the example above:

Stop instance-1 (the first you started) with Ctrl-C. This should trigger a significant rebalancing:

instance-2: formerly invoices-1, now invoices-0 and invoices-2

instance-3: formerly invoices-2, now invoices-1

It would be nice if there would not be a "significant" rebalancing. So, in this case I would have expected instance-2 to continue with invoices-1 and instance-3 to continue with invoices-2 while instance 2 or 3 gets additionally invoices-0. I'm thinking about stream processing applications with more than only 3 instances and only 3 partitions. In this case if a single instance crashes, all other instances will need to stop their stream processing (for example aggregate calculations) and start with consuming from new stream partitions even though in above example they could have just continued if there wasn't a "significant" rebalancing happening.

|

@ansd Thanks for the review. I'll have a look at the code comments. Some answers below.

There's no such thing as a SAC stream. An application sets a property when registering a consumer to declare it's a single active consumer. It must also set the "name" of the consumer, that is, some ID that the several consumer instances should share.

The SAC semantics between queues and streams are different. For a queue, all consumers are the same (they don't have a "name"), so the queue is declared as SAC-compliant and the SAC behavior is enforced for consumers registering to it. It's different with streams. You declare a regular stream and consumers register to it, and they can be SAC or regular consumers.

We use this simple and predictable rebalancing algorithm with a modulo on the list of consumers registered to a stream. It's stream-specific, which means there's no coordination between the streams composing the super stream. It gives good results without too much hassle, but it can get a bit dumb for rebalancing. We're still open to finding another mechanism that could give better results, especially for the rebalancing. |
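A minimal sketch of what such a modulo-based choice could look like, under stated assumptions: the active consumer of a partition is taken at index "partition index modulo number of consumers" in the list of consumers registered to that partition, and every instance registers to every partition in the same order. The class and method names are hypothetical and this is not the broker implementation; it is only meant to show why stopping one instance moves several partitions at once.

```java
import java.util.ArrayList;
import java.util.List;

public class ModuloRebalancingSketch {

    // Assumption: partition index modulo consumer count selects the active consumer.
    public static String activeConsumer(int partitionIndex, List<String> consumers) {
        if (consumers.isEmpty()) {
            throw new IllegalArgumentException("no consumer registered");
        }
        return consumers.get(partitionIndex % consumers.size());
    }

    // Prints the active instance for each of the 3 partitions of the super stream.
    static void printAssignment(List<String> instances) {
        for (int partition = 0; partition < 3; partition++) {
            System.out.println("invoices-" + partition + " -> " + activeConsumer(partition, instances));
        }
    }

    public static void main(String[] args) {
        List<String> instances =
            new ArrayList<>(List.of("instance-1", "instance-2", "instance-3"));
        printAssignment(instances);

        // Stopping instance-1 shifts the list, so each partition is re-evaluated
        // independently and instance-2 and instance-3 both change partitions.
        instances.remove("instance-1");
        printAssignment(instances);
    }
}
```

With 3 instances each partition has a different active instance. After instance-1 is removed, the sketch gives invoices-0 and invoices-2 to instance-2 and invoices-1 to instance-3, which matches the rebalancing reported in the review. Because each stream is evaluated on its own, nothing tries to keep instance-2 on invoices-1.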

looks good, a few smaller fixes

|

I did the acceptance and it worked for me too. I only have the following comments below. Testing SAC =>

Testing Super Streams =>

|

|

My answers below.

This is expected, the producer connections handle the offset storage. There's 1 connection per consumer and tracking consumer to simulate independent applications (in different OS processes). This is set up in the program:

    try (Environment environment =
        Environment.builder()
            .maxConsumersByConnection(1)
            .maxTrackingConsumersByConnection(1)
            .build()) {
      ...
    }

This should not happen, can you tell me how to reproduce?

This is a bug that impacts streams in general, so nothing specific to SAC or super streams.

This is a good point, there's no "global" way to monitor a super stream, some CLI commands would be a good start. This may be out of the scope of this PR for now.

Sounds good, will do. |

In the regular metrics ETS table, not the one from streams. References #3753

It was broken after introducing the "connection label" field for the list_stream_group_consumers CLI command. References #3753

Block stream SAC functions if the feature flag is not enabled. References #3753

References #3753

Stream consumers can be active or not with SAC, so these 2 fields are added to the stream metrics. This is the same as with regular consumers. References #3753

For stream consumer groups CLI commands. This is useful in case new fields are needed in further versions. A node on a new version can ask a node on an old version for new fields. If the old-version node returns a known value for unknown fields instead of failing, the new node can set appropriate default values for these fields in the result of the CLI commands. References #3753

The UI handles the case where the 2 fields are not present. This can happen in a mixed-version cluster, where a node of a previous version returns records without the fields. The UI uses default values (active = true, activity status = up), which is valid as the consumers of the node are "standalone" consumers (not part of a group). References #3753
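The defaulting rule can be sketched like this. It is an illustration in Java rather than the management UI code: the field names `active` and `activity_status` and the defaults (active = true, activity status = up) come from this pull request, while the class name, the map-based record, and the `waiting` value in the example are hypothetical.

```java
import java.util.Map;

public class ConsumerDefaultsSketch {

    // A record from a previous-version node has no "active" field: treat it as active.
    public static boolean active(Map<String, Object> record) {
        return (Boolean) record.getOrDefault("active", Boolean.TRUE);
    }

    // Same idea for the activity status, which defaults to "up".
    public static String activityStatus(Map<String, Object> record) {
        return (String) record.getOrDefault("activity_status", "up");
    }

    public static void main(String[] args) {
        // Record without the 2 fields, as returned by an older node.
        Map<String, Object> oldNodeRecord = Map.of("stream", "invoices-0");
        // Record with the 2 fields; "waiting" is a made-up value for the example.
        Map<String, Object> newNodeRecord =
            Map.of("stream", "invoices-0", "active", false, "activity_status", "waiting");

        System.out.println(active(oldNodeRecord) + " / " + activityStatus(oldNodeRecord));
        System.out.println(active(newNodeRecord) + " / " + activityStatus(newNodeRecord));
    }
}
```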

A formerly active consumer can have in-flight credit requests when it becomes inactive. This commit checks the state of the consumer on credit requests and makes sure not to dispatch messages if it's inactive.
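The check can be pictured with the following sketch. It only models the decision "dispatch or not" on a credit request. What the broker does with the credit of an inactive consumer is left out, and the class and method names are hypothetical.

```java
public class CreditGateSketch {

    private boolean active;

    public CreditGateSketch(boolean active) {
        this.active = active;
    }

    // Called when the group coordinator changes the state of the consumer.
    public void setActive(boolean active) {
        this.active = active;
    }

    // Returns how much may be dispatched for this credit request:
    // nothing if the consumer is inactive, the requested credit otherwise.
    public int onCredit(int requestedCredit) {
        if (!active) {
            return 0;
        }
        return requestedCredit;
    }

    public static void main(String[] args) {
        CreditGateSketch consumer = new CreditGateSketch(true);
        System.out.println("active, credit 1 -> dispatch " + consumer.onCredit(1));

        // The consumer becomes inactive while a credit request is still in flight.
        consumer.setActive(false);
        System.out.println("inactive, credit 1 -> dispatch " + consumer.onCredit(1));
    }
}
```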

And do not do anything if it's not. References #3753

A single active consumer must have a name, which is used as the reference for storing offsets and as the name of the group the consumer belongs to in case the stream is a partition of a super stream. References #3753
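A sketch of the kind of validation this implies, assuming the subscription carries its options as string properties. The property keys `single-active-consumer` and `name` are assumptions made for the example, as are the class name and the `my-app` value; this is not the broker or client code.

```java
import java.util.Map;

public class SacSubscriptionSketch {

    // Rejects a subscription that asks for single active consumer without a name.
    // The property keys are assumptions for this sketch.
    public static void validate(Map<String, String> properties) {
        boolean sac = Boolean.parseBoolean(
            properties.getOrDefault("single-active-consumer", "false"));
        String name = properties.get("name");
        if (sac && (name == null || name.isEmpty())) {
            throw new IllegalArgumentException(
                "a single active consumer must have a name");
        }
    }

    public static void main(String[] args) {
        // Accepted: the name doubles as the offset reference and the group name.
        validate(Map.of("single-active-consumer", "true", "name", "my-app"));
        // Accepted: a regular consumer does not need a name.
        validate(Map.of());
        try {
            validate(Map.of("single-active-consumer", "true"));
        } catch (IllegalArgumentException e) {
            System.out.println("rejected: " + e.getMessage());
        }
    }
}
```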

References #3753

- ConsumerUpdate command in the stream protocol to notify consumers of their state (active or not) and to let the active consumer decide where to consume from
- list_stream_consumer_groups and list_stream_group_consumers CLI commands to list the groups of a given virtual host (virtual host, stream, and name of the group) and to list the consumers of a given group (owning connection, state, etc), respectively
- active and activity_status fields in the consumer metrics (for management plugin) and list_stream_consumers CLI command
- stream_single_active_consumer feature flag

Stream Java Client documentation:

References #3753

References rabbitmq/rabbitmq-stream-java-client#46