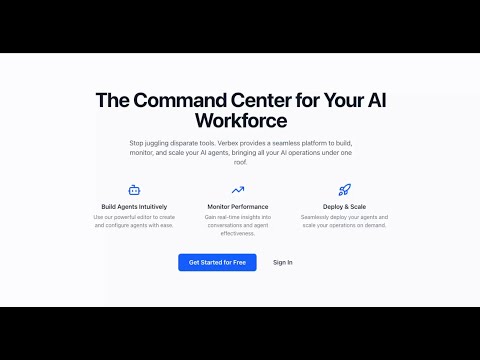

AgentsHub is a full-stack application designed to provide a seamless platform to build, monitor, and scale AI agents. It features a command center dashboard, multiple backend microservices, and a complete containerized setup for easy deployment.

The application is built on a microservices architecture, with Next.js frontends and Spring Boot backends, all containerized with Docker.

The backend consists of 4 main microservices:

- Auth Service - Authentication & user management

- Agent Service - AI agent CRUD operations

- Chat Service - Chat & LLM integration

- Analytics Service - Conversation tracking & metrics (not implemented yet; for now this functionality is integrated into the Chat Service, and I'll separate it into a dedicated microservice later)

The frontend consists of 2 individually deployable Next.js projects.

- Dashboard - Landing page, authentication, admin functionality, analytics view.

- Chatbox - Literally the embeddable chat UI only.

Initially I planned to implement Spring Cloud Gateway, but I chose not to. Instead, I took advantage of the built-in backend of the Next.js projects, which essentially performs the API gateway tasks. Let me explain with some examples.

- Routing requests - I've given individual names to my backend servers, for example authServer, agentServer, chatServer, etc. The communication flow then happens step by step like this.

  - When the client side sends a request to the backend, it attaches one of these names as a prefix of the path. For example: http://dashboard-app:3000/authServer/auth/login

  - Next.js middleware (which runs on the server, not in the browser) forwards the request to the intended server based on the prefix. For example, the request in the previous step is translated and forwarded to: http://auth-server:8080/auth/login

- Authentication & Refresh token

  - When a user's login request succeeds, the Auth Server returns `access_token` and `refresh_token` in the body of the response.

  - Next.js middleware intercepts the response and constructs a new response with `Set-Cookie` headers that set secure, HTTP-only cookies for the `access_token` and `refresh_token`. The browser therefore holds tokens that are inaccessible to JavaScript, which protects them from being stolen by injected scripts.

  - When an authenticated request flows from client to server, the middleware intercepts it as before and moves the token from the cookie to the `Authorization` header as a `Bearer` token.

  - The middleware also handles the refresh-token strategy for requests that are rejected with a 401 or 403 response status.
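The gateway behaviour described in the list above can be sketched with a few pure functions. This is only an illustration, not the project's actual middleware: the helper names are hypothetical, only the `auth-server:8080` mapping comes from the example above (the other hosts and ports are assumed), and the cookie names and the `SameSite=Lax` flag are assumptions as well.

```typescript
// Hypothetical prefix-to-upstream table. Only the authServer entry mirrors the
// README example; the other hosts and ports are assumptions.
const UPSTREAMS = new Map<string, string>([
  ["authServer", "http://auth-server:8080"],
  ["agentServer", "http://agent-server:8100"],
  ["chatServer", "http://chat-server:8200"],
]);

// Translate "/authServer/auth/login" into "http://auth-server:8080/auth/login".
// Returns null when the first path segment is not a known server name.
function resolveUpstream(pathname: string, search = ""): string | null {
  const segments = pathname.split("/");
  const base = UPSTREAMS.get(segments[1] ?? "");
  if (base === undefined) return null;
  return `${base}/${segments.slice(2).join("/")}${search}`;
}

// Read one cookie value out of a raw Cookie header such as "a=1; b=2".
function readCookie(cookieHeader: string, name: string): string | null {
  for (const part of cookieHeader.split(";")) {
    const eq = part.indexOf("=");
    if (eq === -1) continue;
    if (part.slice(0, eq).trim() === name) return part.slice(eq + 1).trim();
  }
  return null;
}

// Build the Authorization header value for the upstream request from the
// access_token cookie (cookie name assumed to match the token name).
function bearerFromCookies(cookieHeader: string): string | null {
  const token = readCookie(cookieHeader, "access_token");
  return token ? `Bearer ${token}` : null;
}

// Build Set-Cookie header values for the tokens found in a login response body.
// HttpOnly hides the cookies from browser JavaScript; SameSite=Lax is an assumption.
function tokenCookies(tokens: { access_token: string; refresh_token: string }): string[] {
  const flags = "Path=/; HttpOnly; Secure; SameSite=Lax";
  return [
    `access_token=${tokens.access_token}; ${flags}`,
    `refresh_token=${tokens.refresh_token}; ${flags}`,
  ];
}

// The README says a refresh is attempted for responses rejected with 401 or 403.
function shouldAttemptRefresh(status: number): boolean {
  return status === 401 || status === 403;
}
```

In a real Next.js middleware these helpers would be called from the exported `middleware` function, with the actual forwarding and response rewriting done through the framework's request and response objects; that wiring is left out here to keep the sketch self-contained.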


Follow these steps to run the entire application stack on your local machine using Docker. (If you just want to see how the app works, this is the way to go. If you plan to develop and contribute, follow the "Run for Development and Contribution" section instead.)

Prerequisites:

- Docker

- Docker Compose

- Node.js

- Clone the Repository.

  `git clone https://github.com/mazeduldev/ai-agent-manager.git`

- Configure environment. All the project directories, including the root directory, have a `.env.example` file. You have to create a `.env` file with similar values. Running the following command does this for you automatically.

  `node ./_scripts/env_init`

- Prepare the environment to run the app in Docker containers. Inside the Docker network, `localhost` will not work: service-to-service communication must use the service names from the docker-compose file, so the service URLs in the `.env` files need to be updated. Run the following command to do this.

  `node ./_scripts/env_switcher docker`

- Very Important: you must provide your own OpenAI API key. The `/backends/chat/.env` file requires an OpenAI API key. I'm not providing mine here :)

- Build and Run with Docker Compose. The following command builds all the service images, similar to a production build, and starts the containers.

  `docker-compose -f docker-compose.prod.yml up --build`

- Access the Applications. Once all containers are running, you can access the services at:

  - Dashboard: http://localhost:3000

  - Chatbox: http://localhost:4000/chat/{agentId}

  - Database (PostgreSQL): Connect via port 5432
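The internals of `env_switcher` are not shown in this README. As a rough picture of the kind of rewrite the "prepare the environment" step describes, here is a minimal sketch that swaps `localhost` URLs for docker-compose service names. The function name, the environment variable names in the usage example, and the `agent-server` and `chat-server` service names are all assumptions; only `auth-server` on port 8080 appears in this document.

```typescript
// Hypothetical mapping from local ports to docker-compose service names.
// Only auth-server:8080 appears in the README; the other entries are assumed.
const SERVICE_BY_PORT: Record<string, string> = {
  "8080": "auth-server",
  "8100": "agent-server",
  "8200": "chat-server",
};

// Rewrite every http://localhost:<port> URL in .env text to use the service
// name for that port. URLs whose port has no known service stay unchanged.
function toDockerUrls(envText: string): string {
  return envText.replace(/http:\/\/localhost:(\d+)/g, (match: string, port: string) => {
    const service = SERVICE_BY_PORT[port];
    return service ? `http://${service}:${port}` : match;
  });
}

// Usage with made-up variable names:
const before = "AUTH_SERVER_URL=http://localhost:8080\nOTHER_URL=http://localhost:9999\n";
const after = toDockerUrls(before);
console.log(after);
```

Keying the table by port keeps the sketch independent of whatever variable names the real `.env` files use.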

Run for Development and Contribution:

- Infrastructure: I strongly suggest using docker-compose only to run the development infrastructure, with the following command.

  `docker-compose -f docker-compose.yml up -d`

- Backend Services: Import the backend Spring Boot projects individually into your preferred Java IDE (I recommend IntelliJ IDEA), then run them with its built-in application runner.

- Frontend: Any code editor with Biome support should work. I recommend VS Code or Cursor.

You can also run everything inside Docker with hot-reload support by running the following command. (This is experimental and not recommended.)

`docker-compose -f docker-compose.local.yml up --build`

For API documentation, visit the following URLs while running the project locally.

| Service | OpenAPI Documentation |
|---|---|
| Auth Service | http://localhost:8080/swagger-ui/index.html |
| Agent Service | http://localhost:8100/swagger-ui/index.html |
| Chat Service | http://localhost:8200/swagger-ui/index.html |
| Analytics Service | Not implemented yet. See the Chat Service for now. |

This project was developed with extensive use of AI programming assistants.

- Tools Used:

  - GitHub Copilot

  - ChatGPT

  - Claude

- Estimated Time Saved:

  - 10-12 hours.

  - The AI assistant was instrumental in bootstrapping the entire Docker environment, generating multi-stage Dockerfiles, creating `.dockerignore` files, writing the `docker-compose.yml` configuration, debugging container networking issues, and generating this `README.md` file. This significantly reduced the time spent on configuration and boilerplate code.

- Example of a Helpful Prompt:

  - "This repository has 2 nextjs app at /frontends and 3 spring boot project at /backends. Backend relies on a posgres database. All the backend and forntend projects has their related .env files. At the root of this repository there is another .env file where the postgres user and password is added. Now I need to update this docker-compose file to run everything with one docker-compose up command."

- Challenge Faced & AI Solution:

  - A significant challenge was the "Connection Refused" error between the backend services and the PostgreSQL database. The AI assistant correctly identified that `depends_on` in Docker Compose only waits for the container to start, not for the application inside it to be ready. It proposed adding a `healthcheck` to the postgres service and updating the backend services to use `depends_on: { condition: service_healthy }`, which resolved the race condition.