Description

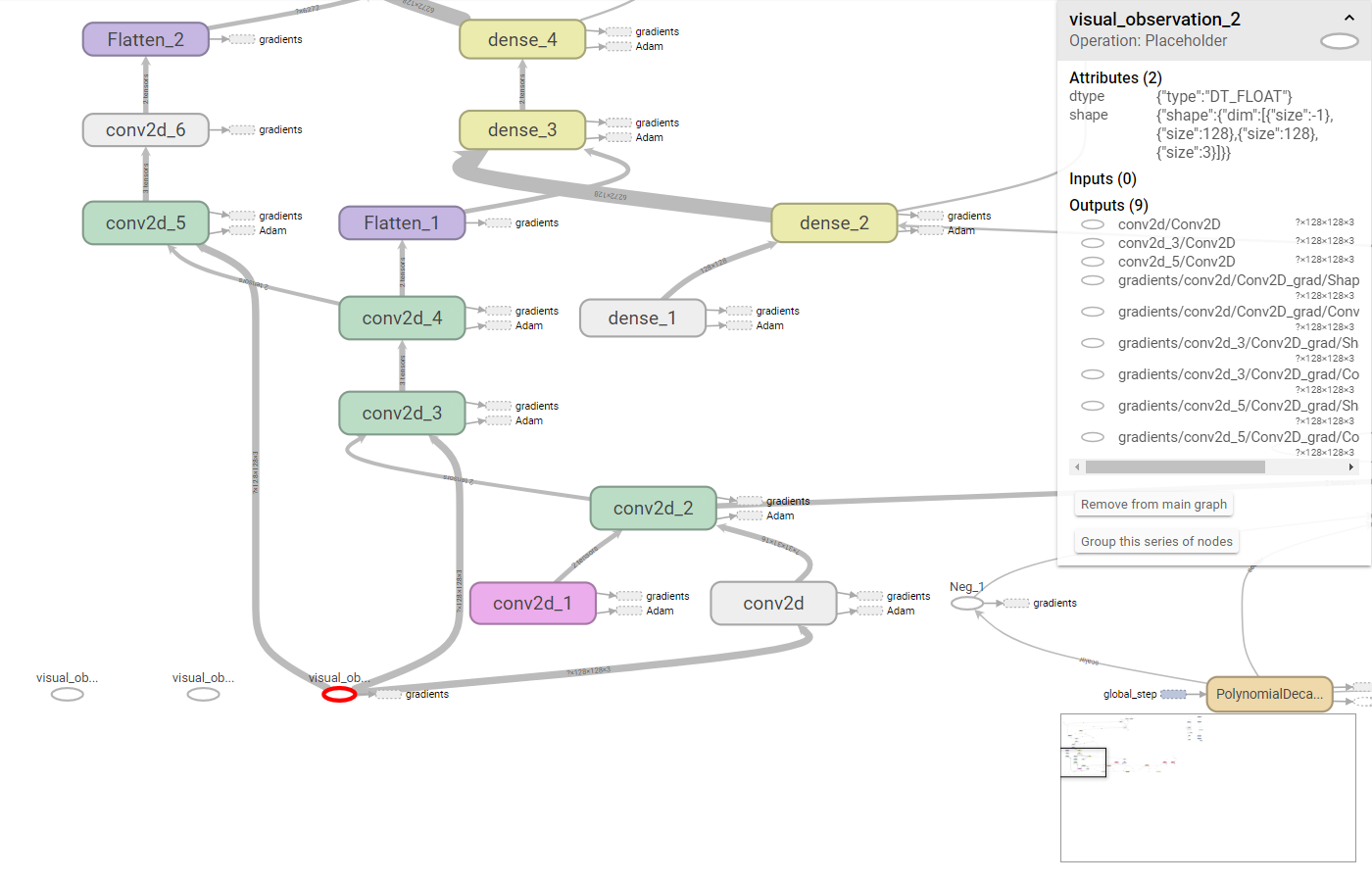

I needed a visualization of the default neural network used by the PPO model, so I plotted the computation graph with TensorFlow. My environment uses three camera observations per brain. In the graph, I noticed that all convolutional layers of the network are connected to the same visual_observation_2 node, and that the other two observation nodes are not connected to any outputs.

I'm a beginner in TensorFlow, but I doubt this setup is correct. I had a look at the create_visual_encoder method in the models.py file and found this line:

conv1 = tf.layers.conv2d(self.visual_in[-1], 16, kernel_size=[8, 8], strides=[4, 4], activation=tf.nn.elu)

This line applies the convolutional layer only to the last item in visual_in, which seems incorrect to me when there are multiple visual observations. Can someone tell me whether this is a real error in the code, or whether I missed something that explains why only the last item of visual_in is used?
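To make the concern concrete, here is a minimal pure-Python sketch of the indexing pattern. It does not use TensorFlow and is not the library's code: `fake_conv_stack` and `connected_inputs` are hypothetical stand-ins I made up, and the three observation names are assumed from the node names in my graph. It only shows which inputs end up connected when the encoder is built on `visual_in[-1]` compared with building one encoder per observation.

```python
# Hypothetical stand-in for the conv2d layers: returns a label instead of a tensor.
def fake_conv_stack(name):
    return "encoded(" + name + ")"

# Assumed input names, matching the three camera nodes seen in the plotted graph.
visual_in = ["visual_observation_0", "visual_observation_1", "visual_observation_2"]

# Pattern from the line quoted above: only the last observation is encoded.
last_only = [fake_conv_stack(visual_in[-1])]

# One possible alternative (an assumption, not a confirmed fix):
# build one encoder per observation and keep all results for concatenation.
per_camera = [fake_conv_stack(obs) for obs in visual_in]

# Hypothetical helper: list the inputs that feed at least one encoder output.
def connected_inputs(encoded):
    return [name for name in visual_in if any(name in e for e in encoded)]

print(connected_inputs(last_only))   # ['visual_observation_2']
print(connected_inputs(per_camera))  # all three observation names
```

With the first pattern only visual_observation_2 is reachable, which matches what I see in the graph. Whether encoding each camera separately is the intended design is exactly what I'm asking about.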