This is a PyTorch implementation of DisCor[1] and Soft Actor-Critic[2,3]. I tried to make it easy for readers to understand the algorithm. Please let me know if you have any questions.

If you are using Anaconda, first create the virtual environment.

conda create -n discor python=3.8 -y

conda activate discor

Then, you need to set up a MuJoCo license on your computer. Please follow the instructions in mujoco-py for help.

Finally, you can install the Python libraries using pip.

pip install --upgrade pip

pip install -r requirements.txt

If you're using a CUDA version other than 10.2, you need to install the PyTorch build that matches your CUDA version. See the PyTorch installation instructions for more details.
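To confirm which CUDA version your installed PyTorch build targets, a small check like the one below may help. This is only a sketch and not part of this repository: the function name `describe_torch_cuda` is made up for illustration, and it relies only on `torch.__version__`, `torch.version.cuda`, and `torch.cuda.is_available()`.

```python
def describe_torch_cuda():
    """Return a one-line summary of the installed PyTorch build and its CUDA support."""
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed"
    # torch.version.cuda is the CUDA version PyTorch was built against
    # (it is None for CPU-only builds).
    built_for = torch.version.cuda or "CPU only"
    # torch.cuda.is_available() reports whether a usable GPU and driver
    # were found at runtime.
    available = torch.cuda.is_available()
    return (
        f"PyTorch {torch.__version__}, built for CUDA: {built_for}, "
        f"GPU available: {available}"
    )


if __name__ == "__main__":
    print(describe_torch_cuda())
```

If the reported CUDA version does not match the one installed on your machine, or the GPU is reported as unavailable, reinstalling PyTorch for the correct CUDA version is the likely fix.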

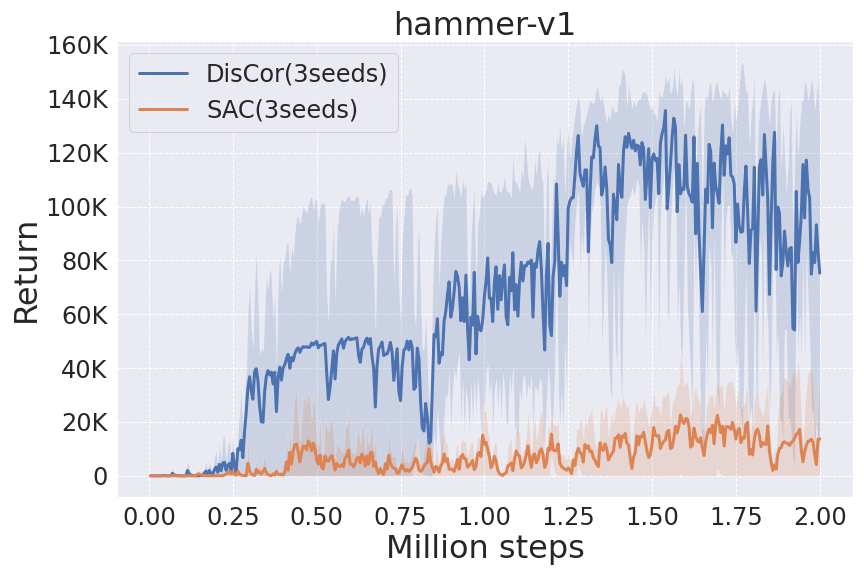

First, I trained DisCor and SAC on hammer-v1 from the MetaWorld tasks as below. Following the DisCor paper, I visualized the success rate in addition to the test return. These graphs correspond to Figures 7 and 16 in the paper.

python train.py --cuda --env_id hammer-v1 --config config/metaworld.yaml --num_steps 2000000 --algo discor

I also trained DisCor and SAC on Walker2d-v2 from the Gym tasks as below. The graph corresponds to Figure 17 in the paper.

python train.py --cuda --env_id Walker2d-v2 --config config/mujoco.yaml --algo discor

[1] Kumar, Aviral, Abhishek Gupta, and Sergey Levine. "Discor: Corrective feedback in reinforcement learning via distribution correction." arXiv preprint arXiv:2003.07305 (2020).

[2] Haarnoja, Tuomas, et al. "Soft actor-critic: Off-policy maximum entropy deep reinforcement learning with a stochastic actor." arXiv preprint arXiv:1801.01290 (2018).

[3] Haarnoja, Tuomas, et al. "Soft actor-critic algorithms and applications." arXiv preprint arXiv:1812.05905 (2018).