
# Welcome 👋

***DataTunerX (DTX)*** is a cloud-native platform, integrated with distributed computing frameworks, for efficiently fine-tuning *LLMs* on scalable *GPU* resources, with a focus on practical utility. Its core strength is batch fine-tuning: users can run multiple fine-tuning tasks concurrently within a single ***experiment***. ***DTX*** provides essential capabilities such as ${\color{#D8CBBE}dataset \space management}$, ${\color{#BDE7BD}hyperparameter \space control}$, ${\color{#F1A7A7}fine-tuning \space workflows}$, ${\color{#BADBF4}model \space management}$, ${\color{#F4CEDB}model \space evaluation}$, ${\color{#D2E3EE}model \space comparison \space inference}$, and a ${\color{#F9E195}modular \space plugin \space system}$.

**Technology stack**:

***DTX*** is built on cloud-native principles and consists of several [*Operators*](https://www.redhat.com/en/topics/containers/what-is-a-kubernetes-operator), each made up of distinct *Custom Resource Definitions (CRDs)* and *Controller* logic. It is developed primarily in *Go* using the [*operator-sdk*](https://github.com/operator-framework/operator-sdk) toolkit. ***DTX*** runs in a [*Kubernetes (K8s)*](https://github.com/kubernetes/kubernetes) environment and relies on the operator pattern to develop and manage its *CRDs*. It also integrates with [*kuberay*](https://github.com/ray-project/kuberay) for distributed execution and inference.
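
The operator pattern boils down to a reconcile loop: a controller repeatedly compares the desired state declared in a custom resource's spec with the state it observes, and acts to close the gap. The Go sketch below shows that idea using only the standard library. The `FineTuneJob` type, its `Replicas` field, and the `reconcile` function are simplified, hypothetical stand-ins, not the actual DTX CRDs or the operator-sdk API.

```go
package main

import "fmt"

// FineTuneJobSpec is a hypothetical, heavily simplified desired state.
// The real DTX CRDs are generated with operator-sdk and have different fields.
type FineTuneJobSpec struct {
	Replicas int // desired number of training workers (illustrative only)
}

// FineTuneJobStatus records what the controller last observed.
type FineTuneJobStatus struct {
	ReadyReplicas int
}

// FineTuneJob pairs the user-declared spec with the observed status,
// mirroring the spec/status split of a Kubernetes custom resource.
type FineTuneJob struct {
	Name   string
	Spec   FineTuneJobSpec
	Status FineTuneJobStatus
}

// reconcile compares desired and observed state and takes one corrective
// step toward the spec. It returns true while more work remains, which a
// real controller would express by requeueing the request.
func reconcile(job *FineTuneJob) bool {
	switch {
	case job.Status.ReadyReplicas < job.Spec.Replicas:
		job.Status.ReadyReplicas++ // stand-in for creating a worker
	case job.Status.ReadyReplicas > job.Spec.Replicas:
		job.Status.ReadyReplicas-- // stand-in for deleting a worker
	}
	return job.Status.ReadyReplicas != job.Spec.Replicas
}

func main() {
	job := &FineTuneJob{Name: "demo", Spec: FineTuneJobSpec{Replicas: 3}}
	requeues := 0
	for reconcile(job) {
		requeues++
	}
	fmt.Printf("%s: %d/%d ready after %d requeues\n",
		job.Name, job.Status.ReadyReplicas, job.Spec.Replicas, requeues)
}
```

In an operator built with operator-sdk, this logic would live in the `Reconcile` method that controller-runtime calls, and the loop in `main` would be replaced by requeued reconcile requests.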

**Status**:

*Alpha (v0.1.0)* - Early development phase. See the [CHANGELOG](CHANGELOG.md) for details on recent updates.

**Quick Demo & More Documentation**:

- [Demo](https://github.com/DataTunerX/datatunerx-controller) (COMING SOON)
- [Documentation](https://github.com/DataTunerX/datatunerx-controller) (COMING SOON)

| 25 | + |

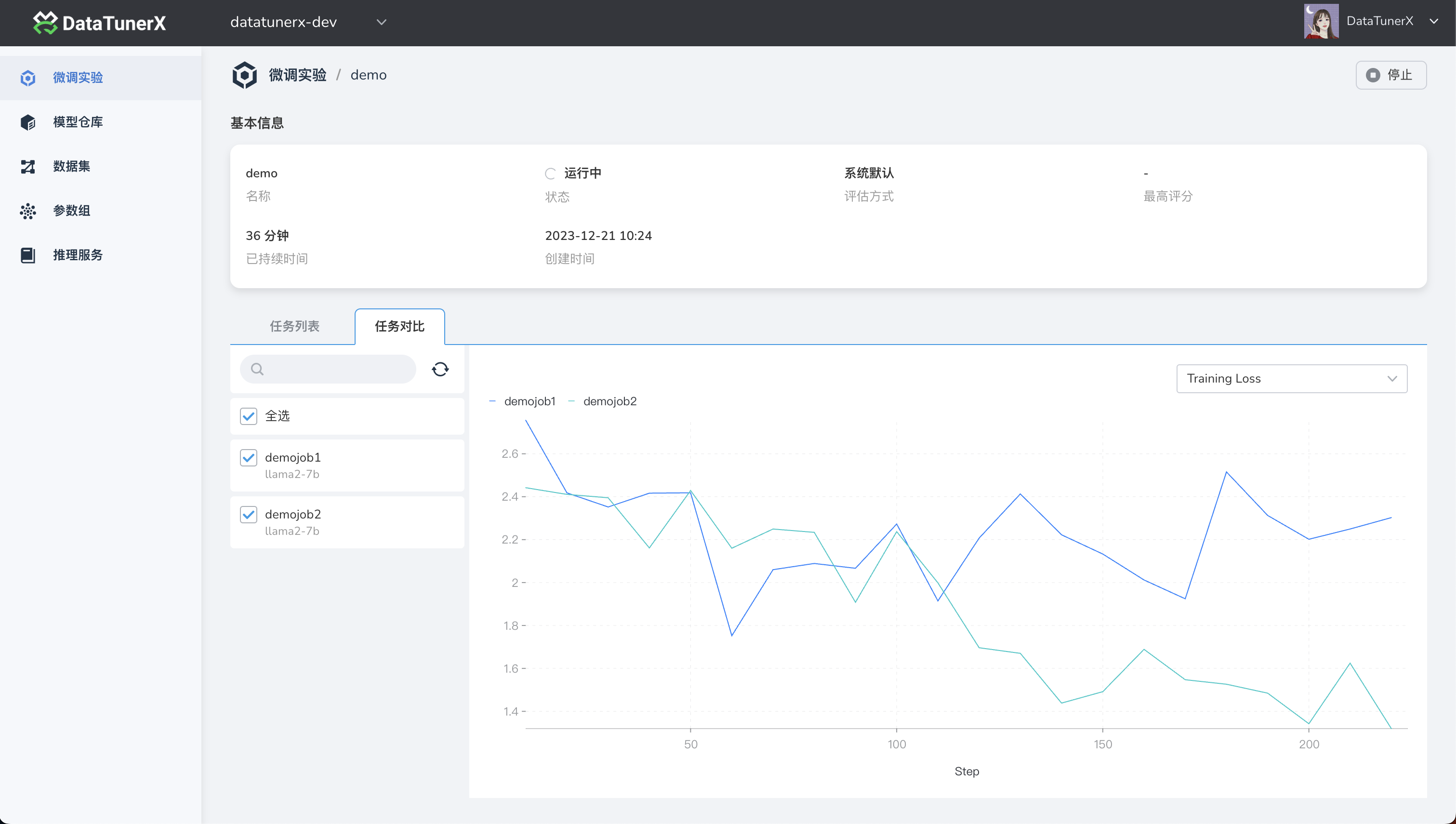

| 26 | +**Screenshot**: |

24 | 27 |

|

25 | | -**Screenshot**: |

26 | 28 |  |

# What Can DTX Do? 💪

***DTX*** provides a robust set of features for efficiently fine-tuning large language models. The following capabilities make ***DTX*** a versatile platform:

## 1. Dataset Management 🗄️

Manage datasets with support for both the *S3* protocol (*HTTP* support is coming) and local uploads. Datasets are organized into splits such as training, validation, and test. Feature mapping adds flexibility by mapping dataset fields to the inputs a fine-tuning job expects.
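
To illustrate splits and feature mapping, here is a minimal Go sketch under stated assumptions: a dataset is held in memory as rows keyed by column name and grouped by split, and a feature map renames source columns to the fields a job expects (here `instruction` and `response`). `Record`, `Dataset`, and `applyFeatureMap` are hypothetical names; DTX's actual dataset schema may differ.

```go
package main

import "fmt"

// Record is one row of a dataset, keyed by the column names in the source file.
type Record map[string]string

// Dataset groups records by split name (for example "train", "validate", "test").
// This layout is a hypothetical simplification, not the DTX dataset schema.
type Dataset struct {
	Splits map[string][]Record
}

// applyFeatureMap renames columns according to featureMap (source -> target),
// so a job expecting "instruction" and "response" can consume a dataset whose
// columns are named differently. Columns absent from the map are dropped, and
// a mapped column that is missing from a record is reported as an error.
func applyFeatureMap(records []Record, featureMap map[string]string) ([]Record, error) {
	out := make([]Record, 0, len(records))
	for i, rec := range records {
		mapped := make(Record, len(featureMap))
		for src, dst := range featureMap {
			v, ok := rec[src]
			if !ok {
				return nil, fmt.Errorf("record %d: missing column %q", i, src)
			}
			mapped[dst] = v
		}
		out = append(out, mapped)
	}
	return out, nil
}

func main() {
	ds := Dataset{Splits: map[string][]Record{
		"train": {{"question": "What is DTX?", "answer": "A fine-tuning platform."}},
	}}
	featureMap := map[string]string{"question": "instruction", "answer": "response"}
	train, err := applyFeatureMap(ds.Splits["train"], featureMap)
	if err != nil {
		panic(err)
	}
	fmt.Println(train[0]["instruction"], "->", train[0]["response"])
}
```

Failing fast on a missing column surfaces a bad mapping before any GPU time is spent on training.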

## 2. Fine-Tuning Experiments 🧪

Run fine-tuning experiments made up of multiple fine-tuning jobs. Each job can use a different LLM, dataset, and set of hyperparameters. The experiment's evaluation unit then evaluates all fine-tuned models in the same way, so their results can be compared directly.

<div align="center">
  <img src="https://raw.githubusercontent.com/DataTunerX/datatunerx-controller/main/assets/design/finetune.png" alt="FineTune" width="30%" />
  <img src="https://raw.githubusercontent.com/DataTunerX/datatunerx-controller/main/assets/design/finetunjobe.png" alt="FineTuneJob" width="30%" />
  <img src="https://raw.githubusercontent.com/DataTunerX/datatunerx-controller/main/assets/design/finetuneexperiment.png" alt="FineTuneExperiment" width="30%" />
</div>
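
The following sketch illustrates the batch idea behind an experiment: several jobs, each with its own base model, dataset, and hyperparameters, train concurrently, and one shared evaluation function scores every result so the scores are comparable. `Job`, `train`, `evaluate`, and `runExperiment` are hypothetical; the training and evaluation bodies are placeholders, and the scoring formula is arbitrary.

```go
package main

import (
	"fmt"
	"sort"
	"sync"
)

// Job describes one fine-tuning run inside an experiment (hypothetical fields).
type Job struct {
	Name         string
	BaseModel    string
	Dataset      string
	LearningRate float64
}

// Result is what the shared evaluation step reports for a finished job.
type Result struct {
	Job   string
	Score float64
}

// train is a placeholder for launching a real training workload.
func train(j Job) string { return j.Name + "-checkpoint" }

// evaluate is a placeholder for the experiment's single evaluation unit.
// Using one function for every job is what makes the scores comparable;
// the formula itself is arbitrary and only for illustration.
func evaluate(checkpoint string, j Job) float64 {
	return 1.0 / (1.0 + j.LearningRate*1000)
}

// runExperiment trains all jobs concurrently, evaluates each checkpoint with
// the same evaluator, and returns results sorted from best to worst score.
func runExperiment(jobs []Job) []Result {
	results := make([]Result, len(jobs))
	var wg sync.WaitGroup
	for i, j := range jobs {
		wg.Add(1)
		go func(i int, j Job) {
			defer wg.Done()
			ckpt := train(j)
			// Each goroutine writes only its own index, so no mutex is needed.
			results[i] = Result{Job: j.Name, Score: evaluate(ckpt, j)}
		}(i, j)
	}
	wg.Wait()
	sort.Slice(results, func(a, b int) bool { return results[a].Score > results[b].Score })
	return results
}

func main() {
	jobs := []Job{
		{Name: "job-lr-1e-4", BaseModel: "model-a", Dataset: "ds-1", LearningRate: 1e-4},
		{Name: "job-lr-5e-4", BaseModel: "model-b", Dataset: "ds-1", LearningRate: 5e-4},
	}
	for _, r := range runExperiment(jobs) {
		fmt.Printf("%s: %.3f\n", r.Job, r.Score)
	}
}
```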

## 3. Job Insights 📊

Gain detailed insights into each fine-tuning job within an experiment. Explore job details, logs, and metric visualizations, including learning rate trends, training loss, and more.

## 4. Model Repository 🗃️

Store LLMs in the model repository to manage them efficiently and deploy them as inference services.

## 5. Hyperparameter Group Management 🧰

Configure hyperparameters through a rich system that supports a wide range of parameters and uses templates to distinguish between hyperparameter groups.
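
One plausible reading of template-based hyperparameter groups is a shared base template plus per-group overrides. The sketch below assumes that model; `Hyperparameters` and `fromTemplate` are hypothetical names, and the parameter keys and values are examples only.

```go
package main

import "fmt"

// Hyperparameters is a hypothetical flat parameter set; DTX's actual
// hyperparameter objects may use different names and types.
type Hyperparameters map[string]string

// fromTemplate copies a base template and applies per-group overrides, so
// several groups can share defaults and differ only where needed.
// The template itself is never modified.
func fromTemplate(template, overrides Hyperparameters) Hyperparameters {
	out := make(Hyperparameters, len(template)+len(overrides))
	for k, v := range template {
		out[k] = v
	}
	for k, v := range overrides {
		out[k] = v // overrides win over template defaults
	}
	return out
}

func main() {
	base := Hyperparameters{"epochs": "3", "learning_rate": "2e-5", "batch_size": "8"}
	groupA := fromTemplate(base, Hyperparameters{"learning_rate": "1e-4"})
	groupB := fromTemplate(base, Hyperparameters{"epochs": "5"})
	fmt.Println(groupA["learning_rate"], groupA["epochs"]) // 1e-4 3
	fmt.Println(groupB["learning_rate"], groupB["epochs"]) // 2e-5 5
}
```

Copying rather than mutating the template keeps one group's overrides from leaking into another.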

## 6. Inference Services 🚀

Deploy inference services for multiple models simultaneously, enabling straightforward comparison and selection of the best-performing model.
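
Comparing models side by side amounts to sending the same prompt to each deployed service and collecting the answers. This sketch assumes a hypothetical `InferenceService` interface; `echoService` is a fake model so the example runs without any deployment, and a real client would call each service's endpoint instead.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
	"sync"
)

// InferenceService is a hypothetical interface for a deployed model endpoint.
type InferenceService interface {
	Name() string
	Generate(prompt string) (string, error)
}

// echoService is a stand-in model used only to make the example runnable.
type echoService struct{ name string }

func (e echoService) Name() string { return e.name }

func (e echoService) Generate(p string) (string, error) {
	return e.name + ": " + strings.ToUpper(p), nil
}

// Answer holds one model's reply (or error) to the shared prompt.
type Answer struct {
	Model string
	Text  string
	Err   error
}

// compare sends the same prompt to every service concurrently and returns
// the answers sorted by model name, ready to be shown side by side.
func compare(prompt string, services []InferenceService) []Answer {
	answers := make([]Answer, len(services))
	var wg sync.WaitGroup
	for i, s := range services {
		wg.Add(1)
		go func(i int, s InferenceService) {
			defer wg.Done()
			text, err := s.Generate(prompt)
			answers[i] = Answer{Model: s.Name(), Text: text, Err: err}
		}(i, s)
	}
	wg.Wait()
	sort.Slice(answers, func(a, b int) bool { return answers[a].Model < answers[b].Model })
	return answers
}

func main() {
	services := []InferenceService{echoService{"model-b"}, echoService{"model-a"}}
	for _, a := range compare("hello", services) {
		fmt.Println(a.Text)
	}
}
```

Keeping errors per answer, instead of aborting on the first failure, lets one unhealthy service be reported without hiding the other models' replies.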

## 7. Plugin System 🧩

Use the plugin system for datasets and evaluation units to integrate specialized datasets and evaluation methods tailored to your own requirements.
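
A plugin system of this kind is commonly built as a named registry of implementations of a shared interface. The sketch below assumes that design for evaluation units; `Evaluator`, `Register`, `Lookup`, and the `exactMatch` plugin are hypothetical and are not DTX's actual plugin API.

```go
package main

import (
	"fmt"
	"strings"
)

// Evaluator is a hypothetical plugin contract for an evaluation unit:
// it scores a model's prediction against a reference answer.
type Evaluator interface {
	Score(prediction, reference string) float64
}

// registry maps plugin names to implementations, so new evaluators can be
// added without changing the code that looks them up and calls them.
var registry = map[string]Evaluator{}

// Register adds a plugin under name, rejecting duplicate names.
func Register(name string, e Evaluator) error {
	if _, exists := registry[name]; exists {
		return fmt.Errorf("evaluator %q already registered", name)
	}
	registry[name] = e
	return nil
}

// Lookup returns the plugin registered under name, if any.
func Lookup(name string) (Evaluator, bool) {
	e, ok := registry[name]
	return e, ok
}

// exactMatch is a trivial example plugin: it returns 1 if the strings match
// ignoring case and surrounding whitespace, and 0 otherwise.
type exactMatch struct{}

func (exactMatch) Score(pred, ref string) float64 {
	if strings.EqualFold(strings.TrimSpace(pred), strings.TrimSpace(ref)) {
		return 1
	}
	return 0
}

func main() {
	if err := Register("exact-match", exactMatch{}); err != nil {
		panic(err)
	}
	if e, ok := Lookup("exact-match"); ok {
		fmt.Println(e.Score(" Paris ", "paris")) // 1
	}
}
```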

## 8. More Coming 🤹‍♀️

DTX offers a comprehensive suite of tools for a seamless, flexible fine-tuning experience. Explore each feature to tailor your fine-tuning tasks to your specific needs.

# Why DTX? 🤔

# Architecture 🏛️

This section gives an overview of how DataTunerX is structured, including its key components, how they interact, and how each contributes to the system's functionality.

# Installation 📦

Detailed instructions on how to install, configure, and run the project are available in the [*INSTALL*](INSTALL.md) document.

# Usage 🖥️
